I recently read Mastery, by Robert Greene. In the book, Greene explores masters of their craft and the paths they took to get there. The title of "Master" is not earned overnight, but there are daily strategies you can employ to get there. After reading this book, I noticed that someone in the video game industry shares many of the attributes of a master: Brian Fargo. Fargo is highly accomplished in the industry, so I decided to examine his path to mastery to see how it aligned with Greene's theories. I went through interviews, presentations, and even my own personal correspondence with Mr. Fargo to examine the path of success in the video game industry.

A professional blog chronicling my work in the video game industry as a game designer and environmental artist. I have a BS in Game Art and an MS in Game Design from Full Sail University.

Wednesday, July 18, 2018

Thursday, July 12, 2018

Creating LOD Planes

A constant struggle in the art world involves fine-tuning Level of Detail, or LOD. On a current project, I was presented with a scene that would need thousands of instances of assets, all while supporting 90 FPS (the target FPS for VR). I came up with a solution that works because this scene is laid out inside an aircraft, essentially a long hallway. Since you only approach objects from the front or the rear, I decided to use planes for the last LOD level, since they only consist of 4 tris. Here is my process.

When you are ready to create the last LOD level, set up your scene for the plane capture process. Remove all unwanted objects from the scene to stop them from being rendered.

Create a backdrop that will contrast well with the asset in question. For most assets, a green screen works well, but a blue or red screen can also be used. I simply set up two planes and applied a StingrayPBS material with a flat color. Be sure to get rid of all metal and roughness values to create a “flat” material.

Next, set up the lighting for your scene by selecting Lighting > Use Flat Lighting. This will help you get a render shot that doesn’t interact with light. Since this image plane will be used for the last level of the LOD group, it is important to have a clean image.

Open up the Render View and set the render type to Maya Hardware 2.0. Then, open up the Render Settings window.

Once opened, change the Renderable Camera to the desired one. For most assets, the Front camera will be the best to get a straight shot of the asset. Additionally, consider rotating the object 180 degrees for the rear render shot, instead of setting up a new camera for a rear shot.

Next, change the image size to HD 720 or better to ensure you are working with a clean, crisp image.

In the Render Settings, you also must change the lighting mode to Default. If your render shot is black, it’s most likely because this setting is set to “All.” Default will tell it to use the same lighting you set up in the editor (in this case, Flat Lighting).

After getting the setup process complete, you can now render your image. Typically, it’s easiest to use the orthographic view for these shots. After you are satisfied, you can move on to exporting your render shot.

On the Render View, open up File > Save Image.

Make sure to select the options button on the Save Image tab.

This will open up another window. Change the save mode to “Color Managed Image – View Transform embedded.”

Once done with the Save Settings, go back and this time click File > Save Image. Name the asset in a logical manner, describing the asset and what shot you are capturing. Next, change the file type to PNG.

Next, bring the image into Photoshop. Get rid of the background through smart selection or Select > Color Range. It should be easy to grab all the green and then delete it out of the image. Once all the background is cleaned from the image, select what’s left and create an alpha channel.
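The background removal above is done by hand in Photoshop, but the idea behind it can be sketched in a few lines. This is only an illustration in plain Python, not part of the original workflow: the function name `chroma_key_alpha`, the pure-green key color, and the tolerance value are all assumptions chosen for the example.

```python
def chroma_key_alpha(pixels, key=(0, 255, 0), tolerance=100):
    """Return RGBA pixels: alpha 0 where a pixel is close to the key color, 255 elsewhere.

    pixels is a list of rows, each row a list of (r, g, b) tuples.
    key and tolerance are illustrative defaults, not values from the post.
    """
    out = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            # Squared Euclidean distance from the backdrop color in RGB space.
            dist_sq = (r - key[0]) ** 2 + (g - key[1]) ** 2 + (b - key[2]) ** 2
            alpha = 0 if dist_sq <= tolerance ** 2 else 255
            out_row.append((r, g, b, alpha))
        out.append(out_row)
    return out


# A tiny 2x2 "render": top row is backdrop green, bottom row is the asset.
image = [
    [(0, 255, 0), (10, 240, 12)],
    [(200, 200, 210), (40, 40, 45)],
]
keyed = chroma_key_alpha(image)
```

A hard threshold like this mirrors a basic color-range selection. It also shows why a flat, evenly lit backdrop matters: the cleaner the key color, the tighter the tolerance can be without eating into the asset.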

When you are satisfied with the image and have an appropriate alpha channel, you can export the image. Remember when exporting to always save as a Targa or another format that allows for 32 bits (24 bits of color plus an 8-bit alpha channel). Now you are ready to apply the image to a plane to be used for the desired LOD level. Test in Maya and, if satisfied, add the plane to the LOD group and name it accordingly.

Export the LOD group to Unity. In Unity, make sure the texture is set to pull alpha from transparency and the material is set to Cutout. If a prefab is set up already, add the LOD group to it. If no prefab is created yet, create an empty game object to house the LOD group and create your own prefab. You can change the LOD distances in the inspector window for testing purposes. It is recommended to keep the distances between LOD switches small to effectively test the transitions. After successful testing, you can change the LOD switch points back to the standard.
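To make the idea of switch points concrete, here is a small, simplified sketch in plain Python of picking an LOD level from camera distance. It is an illustration only: Unity's LOD Group expresses its switch points as a percentage of screen height rather than as raw distances, and the function name and the distance values below are made up for the example.

```python
def select_lod(distance, switch_distances):
    """Return the LOD index for a camera distance.

    switch_distances is an ascending list; entry i is the distance at which
    LOD i hands over to LOD i + 1.
    """
    for index, limit in enumerate(switch_distances):
        if distance < limit:
            return index
    # Past every switch point: use the last LOD (the image plane).
    return len(switch_distances)


# Hypothetical values: tight switch points for testing, wider ones for shipping.
TEST_SWITCHES = [2.0, 4.0, 6.0]
FINAL_SWITCHES = [10.0, 25.0, 40.0]

lod_near = select_lod(1.0, TEST_SWITCHES)
lod_far = select_lod(10.0, TEST_SWITCHES)
```

With the tight test values, walking a few steps down the aisle is enough to see every transition, including the swap to the plane. The same walk with the wider values would never leave LOD 0.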

That’s it! Now you have a LOD level that consists of 4 tris (one plane) and still looks like a high-fidelity model. Granted, this only works because of the environment layout (an aircraft is essentially a very long hallway). If you are working in a more open environment where the player can approach an asset from many different angles, you might have to change your approach. As it stands, for this project it was a great solution, as the player can really only approach an asset from the front or the rear. There are no angled views that might ruin the illusion. Here's a render shot:

Here you can see where the plane LOD starts to kick in, about 6 or 7 rows back. At this distance, the difference is almost imperceptible. It’s a good solution for the design of the level.

Hope this little bit of magic will help you in your project or inspire you to try something similar. If so, make sure to share your work and, as always, comments and feedback are encouraged!

Tools Used:

Maya 2018, Unity 2018, Photoshop CC

Tuesday, July 3, 2018

Holograms: Breathing Effect

It's been a while; I've been busy with a new job for the last 9 months. We are currently working on a product that utilizes Unity, the HoloLens, and augmented reality. While there are a lot of pieces to this project, there was one "problem space" we stumbled across that I believe is worth sharing with the community. I will state that since this project is still a work in progress and proprietary, I am not able to show images of the actual results we are using.

The Process: When we first approached this issue, we did extensive research online. We read technical docs for the HoloLens, reached out to online communities, and spoke to others in the industry. Everyone told us it was impossible to have a hologram affect the real world. For this project, I wanted an effect that creates movement under the skin, like lungs expanding and contracting. The roadblock here was that we couldn't use animated models, but rather needed an effect that would always cause this distortion. This would allow us to scan ANY person with the HoloLens and cause distortion, based on markers.

I started off with two capsules, basic shapes to represent the lungs. Since we needed this effect to actually impact the world behind it, I established the primary functions that the effect would have to have. First, it would need to be able to expand and contract, mimicking actual lung movement. Second, these would need to be public variables that could be altered based on incoming data. Third, there needed to be some sort of texture to the effect that would add to the overall distortion.

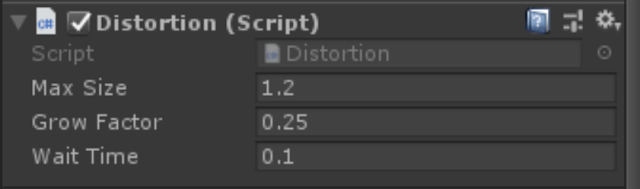

I broke this up into two main functions: movement and distortion. For the expanding and contracting effect, I decided to make a script I could attach to my capsules. Now, I have mainly an art background, so don't be intimidated by any code I implemented; it's fairly simple. Essentially, this script has three public variables that allow you to control its grow size, rate of growth, and pause time (lungs have a slight pause in between expanding and contracting, so this was necessary).

That's it. That simple script gives you these variables in the inspector and they can be fine-tuned for specific purposes. Max Size lets you put in a positive or negative value. Be warned though, this assumes the scale of the object is 1,1,1. So any scaling should be done prior to Unity. Grow Factor is the speed at which the object will grow. Last, Wait Time indicates a delay before the object shrinks back to its original size. This can be set to 0 if not needed, depending on the function.
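The original Unity script is not reproduced in this post, so here is a rough sketch of the behavior described, written in plain Python rather than as a Unity component. It rests on a few assumptions that are mine, not the project's: Max Size is treated as an offset added to the base scale of 1, Grow Factor as scale units per second, and the function name `breathing_scale` is hypothetical.

```python
def breathing_scale(t, max_size, grow_factor, wait_time):
    """Uniform scale of the capsule at time t (seconds) in a repeating cycle.

    Assumes a base scale of 1; max_size is an offset (positive or negative),
    grow_factor is scale units per second, wait_time is the pause at full size.
    """
    if max_size == 0 or grow_factor <= 0:
        return 1.0
    travel = abs(max_size) / grow_factor   # seconds to grow (and to shrink)
    cycle = 2 * travel + wait_time
    phase = t % cycle
    if phase < travel:                     # expanding
        progress = phase / travel
    elif phase < travel + wait_time:       # pausing at full size
        progress = 1.0
    else:                                  # contracting back to the base scale
        progress = 1.0 - (phase - travel - wait_time) / travel
    return 1.0 + max_size * progress


# Two lungs with different settings (illustrative numbers only).
normal_lung = breathing_scale(2.0, max_size=0.5, grow_factor=0.25, wait_time=1.0)
restricted_lung = breathing_scale(2.0, max_size=0.1, grow_factor=0.05, wait_time=1.0)
```

Keeping the three values as plain inputs is what makes the effect data-driven: the same function can produce a normal breath or a restricted one just by feeding it different numbers.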

After applying this script I determined it worked quite well. I could have two separate lungs (left and right) and apply different variables so one behaves "normal" while the other indicates a tension pneumothorax. It allows for a multitude of different behaviors depending on how you play with the settings. This handles the movement function of the effect, but we still need to tackle distortion.

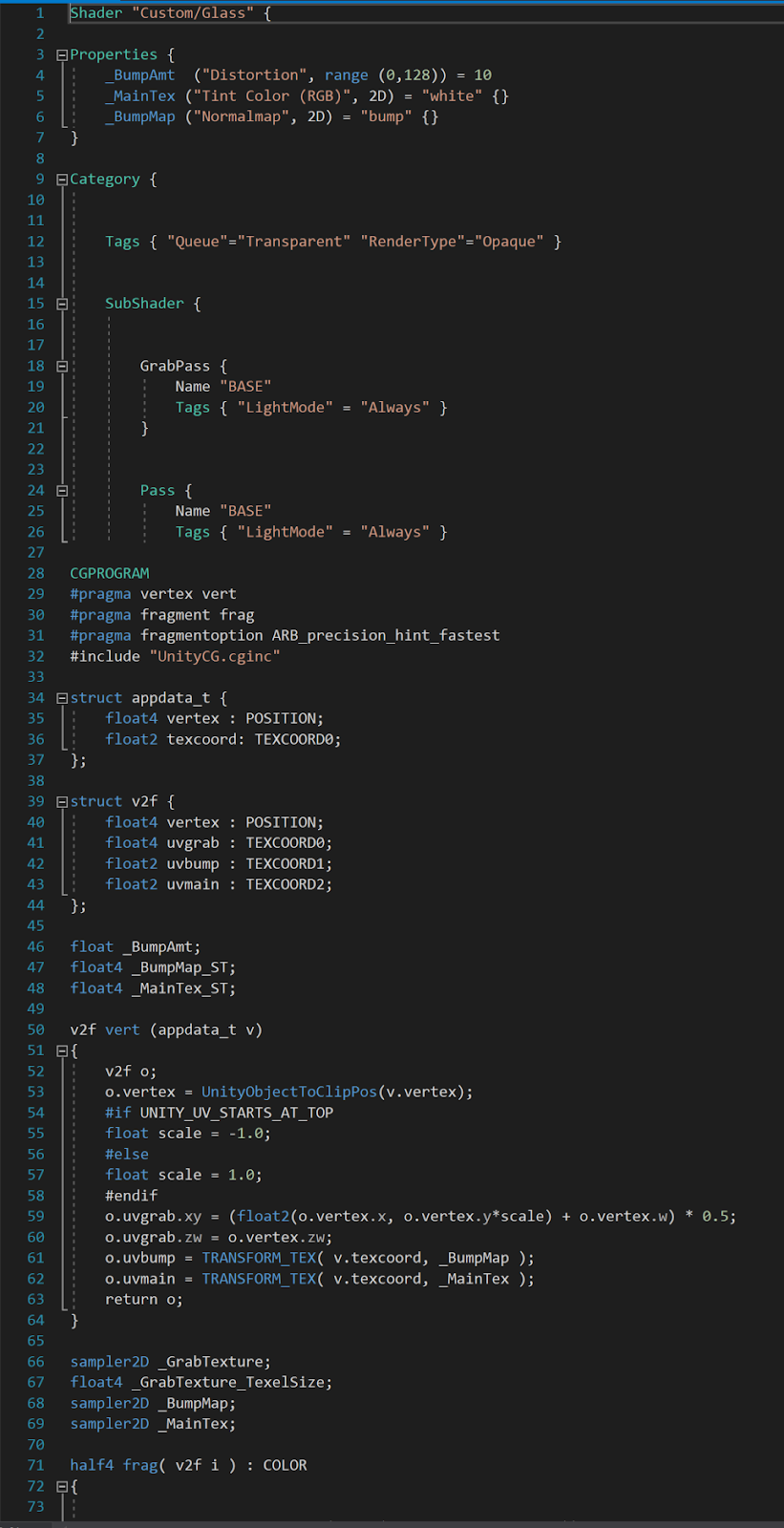

In order to heavily distort things viewed through the effect, I created a custom shader. As shaders go, it's nothing terribly complicated.

This shader works by grabbing a snapshot of the textures behind it and then running a distortion function on them, supplied by the user. So the output for this shader allows for two maps, an RGB Tint Color and a Normal Map. These maps will add to the distortion of things behind them.
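The shader itself is not shown in this post. As a conceptual sketch only, here is the general idea of distorting a captured background with a normal map and a tint, written in plain Python instead of shader code. The function name `distort`, the `strength` parameter, and the tiny one-row "image" are all assumptions for illustration.

```python
def distort(background, normals, tint=(1.0, 1.0, 1.0), strength=1.0):
    """Resample a captured background through a normal map, then tint it.

    background: rows of (r, g, b) floats, the image behind the effect.
    normals: rows of (nx, ny) offsets in the range -1..1, same size as background.
    strength: how many pixels a full-length normal shifts the lookup.
    """
    height, width = len(background), len(background[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            nx, ny = normals[y][x]
            # Shift the lookup position by the normal, clamped to the image edges.
            sx = min(max(x + round(nx * strength), 0), width - 1)
            sy = min(max(y + round(ny * strength), 0), height - 1)
            r, g, b = background[sy][sx]
            row.append((r * tint[0], g * tint[1], b * tint[2]))
        result.append(row)
    return result


# One row of red, green, blue; the normals pull both ends toward the middle.
snapshot = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]]
normal_map = [[(1.0, 0.0), (0.0, 0.0), (-1.0, 0.0)]]
warped = distort(snapshot, normal_map, strength=1.0)
```

With a strength of 0 the background passes through untouched, so a single value is enough to dial the distortion up or down.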

You can plug in the desired normal and tint maps. There is also a public slider that lets you control the impact of the distortion. This shader worked great in combination with the movement script already attached. It distorted the objects behind it, and the expanding and contracting of the effect led to the appearance of expanding and contracting on other objects. I was able to successfully test in Unity and in VR on the HTC Vive. However, the real challenge was about to begin: how do I transfer this to a system that will affect the real world around it?

The Problem Space: How do we affect the physical world through holograms?

The short answer is, it isn't possible. There are a few exceptions. You could mount a forward-facing camera on the Vive, and then overlay the holograms on that captured environment. This will, however, cause a good deal of lag, so we didn't consider it as an option. So we were left with coming up with a way of making it appear to affect the environment. At this point, I got sucked down a rabbit hole. I turned to one of our developers (a not-artist, if you will) and we were able to work together, combining the functions I had with one of his own creation. He supplied a shader that would hide all objects rendered behind it. This way the effect won't be seen on other objects in the scene. Here is that shader.

Next, we flattened the capsules from the effect so they wouldn't collide with anything else around them. Then I placed an object behind the effect that has a transparent shader. That object isn't visible in AR, but it exists and thus allows the effect to be seen. By placing this object behind the effect, the effect was clearly visible in AR. Here is the same effect applied to a different asset. You can see the distortion happening inside the highlighted area. This will also expand and contract when played.

So ultimately, we found a cheat that allowed us to push forward and have a successful effect that distorts things in the real world. It was a huge challenge and took several weeks of work, but ultimately it was very rewarding to get it working. Again, I apologize that I can't currently show images, but after the product has shipped I can come back and add those in.
